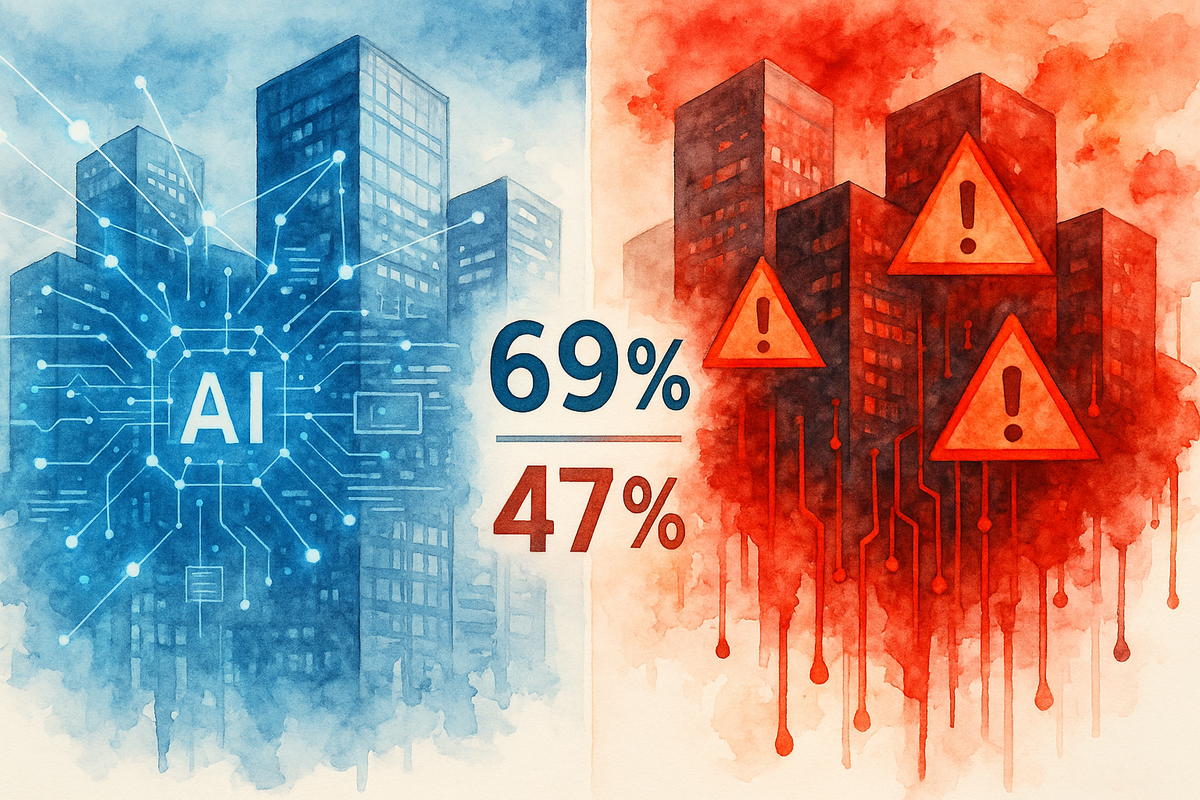

The 69% Security Paradox: Enterprise AI Adoption Outpaces Protection

69% of enterprises cite AI data leaks as their top concern, yet 47% have no AI-specific security controls. This isn't just a gap; it's organizational cognitive dissonance at enterprise scale.

While OpenAI's 3 million enterprise users and Microsoft's latest Copilot Studio updates dominated headlines, a sobering reality check emerged from security researchers: we're witnessing the largest deployment of unprotected AI systems in enterprise history.

The numbers tell a stark story. BigID's latest research reveals that 69% of organizations cite AI-powered data leaks as their top security concern, yet nearly half (47%) have no AI-specific security controls in place.

Critical Alert: This gap between awareness and action creates immediate vulnerability, because enterprises are accelerating AI adoption without corresponding protection measures.

Why Are Most Companies Unprepared for AI Security Threats?

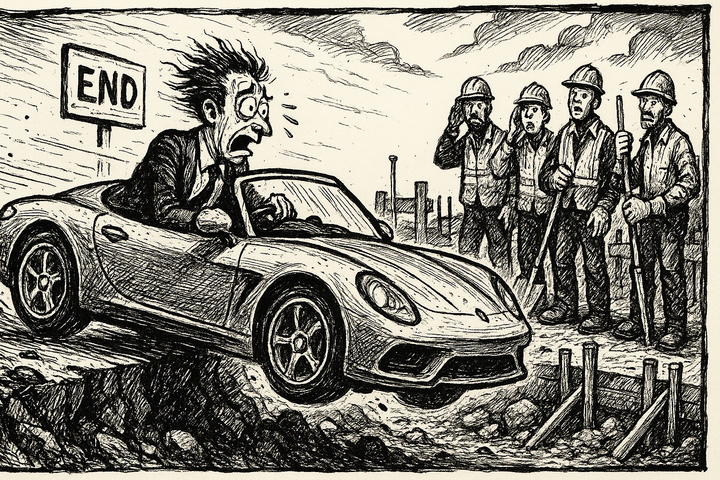

The answer lies in what I call the "AI-first trap," the same pattern I documented in my analysis of the "55% regret club": organizations rush into AI implementation without proper foundations.

Consider the pace of current enterprise AI deployment:

- HPE's $1.1 billion in AI orders, with enterprise AI accounting for one-third

- Microsoft introduced "computer use" in Copilot Studio, allowing agents to perform tasks across desktop and web applications

- Organizations are moving from simple chatbots to complex multi-agent systems without understanding the security implications

Meanwhile, Metomic's 2025 security report found that 68% of organizations have experienced data leaks linked to AI tools, yet only 23% have formal security policies in place.

"Enterprises are treating AI security as an afterthought, not a foundation. This is exactly how you end up in the 55% regret club."

The pattern is clear: the same organizations celebrating AI transformation today will be the ones dealing with security incidents tomorrow.

What Security Risks Do Enterprises Face with AI Implementation?

The risks compound when organizations deploy AI without proper security frameworks. Here's what I'm seeing in my consulting work:

Multi-Agent Vulnerability Amplification

Microsoft's multi-agent systems sound impressive: agents collaborate by delegating tasks and sharing results. But each connection point adds new attack surface.

Recent enterprise security research shows that 64% of organizations have deployed at least one generative AI application with critical security vulnerabilities. When you connect multiple vulnerable agents, you're not just adding risk—you're multiplying it.
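One way to make "multiplying" concrete is a simple probability sketch. Assume, purely for illustration, that each agent in a workflow is independently exploitable with the same probability p, and that compromising any one agent exposes the whole chain. The chain is safe only if every agent is safe, so the chance that at least one link fails is 1 - (1 - p)^n. The independence assumption and the 5% figure below are hypothetical, not numbers from the research cited above.

```python
def chain_compromise_probability(p: float, n: int) -> float:
    """Probability that at least one of n connected agents is exploitable.

    Hypothetical model: each agent is independently exploitable with
    probability p, and one compromised agent exposes the whole chain.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # The chain stays safe only if every agent stays safe: (1 - p) ** n.
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Hypothetical per-agent probability of 5%, not a measured value.
    for agents in (1, 3, 5, 10):
        risk = chain_compromise_probability(0.05, agents)
        print(f"{agents:>2} agents: {risk:.1%} chance at least one is exploitable")
```

With the hypothetical 5% per-agent figure, three agents give roughly a 14% chance and ten agents roughly 40%. Real agents share infrastructure and are rarely independent, so treat this as intuition rather than a risk model.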

The "Quiet Breach" Problem

Perhaps most concerning: security researchers report that organizations have zero visibility into 89% of AI usage, despite having security policies in place. Employees are connecting company data to AI systems without IT's knowledge and pasting sensitive information into prompts, creating what researchers call "prompt leaks."

Real-world examples include:

- Samsung's 2023 incident, in which employees pasted sensitive source code into ChatGPT

- Slack AI's 2024 prompt injection vulnerability, which researchers showed could leak data from private channels
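A common first control against prompt leaks like the Samsung case is to screen outbound prompts for obviously sensitive content before they reach an external AI service. The sketch below is a deliberately simple, hypothetical regex pre-check; the pattern names and rules are illustrative assumptions, and production data loss prevention (DLP) tools use far richer detection.

```python
import re

# Hypothetical patterns for illustration only; real DLP rules are broader.
SENSITIVE_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "internal_marker": re.compile(r"\b(CONFIDENTIAL|INTERNAL ONLY)\b", re.IGNORECASE),
}


def find_sensitive_content(prompt: str) -> list[str]:
    """Return the names of any sensitive patterns found in a prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


def screen_prompt(prompt: str) -> tuple[bool, list[str]]:
    """Decide whether a prompt may be sent to an external AI tool.

    Returns (allowed, reasons); any pattern match blocks the prompt.
    """
    hits = find_sensitive_content(prompt)
    return (not hits, hits)


if __name__ == "__main__":
    print(screen_prompt("Summarize our public press release."))  # (True, [])
    allowed, reasons = screen_prompt("Debug this: key=AKIAABCDEFGHIJKLMNOP, INTERNAL ONLY")
    print("allowed" if allowed else f"blocked: {', '.join(reasons)}")
```

Pattern matching catches only the obvious cases; pasted source code like Samsung's rarely carries a neat marker, which is why screening complements, rather than replaces, visibility into where AI tools are used.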

"The quiet breach problem is the most dangerous trend I'm seeing. Organizations think they have AI governance when they really have AI chaos."

Data Dependency Vulnerabilities

Unlike traditional software, AI systems are vulnerable to data poisoning, data leakage, and integrity attacks throughout their entire lifecycle. The U.S. Treasury's guidance on AI-specific cybersecurity risks warns that "AI systems are more vulnerable to these concerns than traditional software systems because of the dependency of an AI system on the data used to train and test it."

How Can Organizations Close the AI Security Gap?

Based on my work with enterprise clients, here's the framework that actually works:

1. Implement Human-First Governance Before AI-First Deployment

This connects directly to the human-AI hybrid workforce approach I've detailed. You need human oversight protocols before you deploy multi-agent systems.

Start with these questions:

- Who has authority to approve AI system connections to company data?

- What's your incident response plan when an AI agent accesses unauthorized information?

- How do you audit AI decision-making in regulated environments?
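The first question is easier to enforce when approvals are recorded rather than implied. As a minimal sketch, assuming a hypothetical registry keyed by AI tool and data source, a default-deny check allows a connection only if someone with authority has approved it, and keeps a record of who did. The tool names, data sources, and approver below are illustrative placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ConnectionApproval:
    """Hypothetical record of who approved an AI tool's access to a data source."""

    ai_tool: str
    data_source: str
    approved_by: str


# Hypothetical registry; in practice this belongs in a governed system of record.
APPROVED_CONNECTIONS = {
    ("support-assistant", "public-kb"): ConnectionApproval(
        ai_tool="support-assistant",
        data_source="public-kb",
        approved_by="ai-governance-committee",
    ),
}


def check_connection(ai_tool: str, data_source: str) -> Optional[ConnectionApproval]:
    """Default-deny check: return the approval record, or None if none exists."""
    return APPROVED_CONNECTIONS.get((ai_tool, data_source))


if __name__ == "__main__":
    requests = [("support-assistant", "public-kb"), ("support-assistant", "hr-records")]
    for tool, source in requests:
        approval = check_connection(tool, source)
        if approval is not None:
            print(f"{tool} -> {source}: allowed, approved by {approval.approved_by}")
        else:
            print(f"{tool} -> {source}: blocked, no recorded approval")
```

Defaulting to deny means an unreviewed connection fails closed, and the stored approver gives auditors a direct answer to "who signed off on this?"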

2. Develop AI-Specific Risk Management Frameworks

BigID's research shows only 6% of organizations have an advanced AI security strategy. This isn't because AI security is impossible—it's because organizations are trying to retrofit traditional IT security frameworks onto fundamentally different technology.

The frameworks that work integrate:

- Model risk management protocols

- Data lifecycle security controls

- Multi-agent coordination governance

- Real-time monitoring and anomaly detection
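As one minimal illustration of real-time monitoring and anomaly detection, the sketch below flags a user whose daily volume of AI tool requests jumps far above their own historical baseline, using a simple z-score. The function name, data shape, and threshold of 3 standard deviations are hypothetical assumptions; real monitoring would combine richer signals than request counts.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's AI request count if it sits far above the user's baseline.

    history: hypothetical daily request counts for one user (needs >= 2 days).
    threshold: standard deviations above the mean that count as anomalous.
    """
    if len(history) < 2:
        return False  # Not enough data to form a baseline.
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase at all is unusual.
        return today > baseline
    z_score = (today - baseline) / spread
    return z_score > threshold


if __name__ == "__main__":
    usage = {
        "analyst_a": ([12, 15, 11, 14, 13], 14),
        "analyst_b": ([10, 9, 11, 10, 12], 85),
    }
    for user, (history, today) in usage.items():
        print(user, "ANOMALY" if is_anomalous(history, today) else "normal")
```

A per-user baseline avoids flagging heavy but steady users, at the cost of needing a few days of history before the check means anything.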

3. Focus on Financial Services and Regulated Industries First

Financial services firms face particular vulnerability. Despite handling highly sensitive data, only 38% have AI-specific protections.

"Financial services provide the perfect case study because regulatory requirements create clear accountability standards, yet most firms are deploying AI systems faster than they're building security controls."

The sector is instructive for three reasons:

- Regulatory requirements create clear accountability standards

- Data breaches have immediate, measurable financial impact

- Multi-agent systems are being deployed for fraud detection and customer service

The Path Forward: Building Security-First AI Implementation

The security paradox isn't inevitable. Organizations that build proper foundations can deploy AI systems safely and effectively. This requires what I call "security-conscious AI transformation"—the approach detailed in our Microsoft 365 AI enterprise implementation guide.

Key principles include:

- Security by design: Implement protection controls before deploying AI capabilities

- Human oversight protocols: Ensure employees develop "agent boss" skills (directing and overseeing AI agents) alongside technical deployment

- Phased rollout approaches: Start with low-risk use cases and expand based on security maturity

- Continuous monitoring: Treat AI security as an ongoing process, not a one-time implementation

The organizations getting this right understand that AI security isn't about slowing down innovation—it's about ensuring innovation doesn't blow up in your face.

What Framework Should Enterprises Use for AI Risk Management?

Based on successful implementations I've guided, the most effective approach combines:

Immediate Actions (Next 30 Days):

- Conduct a comprehensive AI usage audit across all departments

- Implement temporary restrictions on AI tool connections to sensitive data

- Establish an AI governance committee with cross-functional representation
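For the AI usage audit, one practical starting point is to count requests to known AI services in web proxy logs, grouped by department, to surface usage IT doesn't know about. The sketch assumes a hypothetical CSV export with `department` and `host` columns and a hand-maintained example list of AI domains; adapt both to whatever your proxy or secure web gateway actually exports.

```python
import csv
import io
from collections import Counter

# Example watchlist only; maintain your own, since AI services change often.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def is_ai_host(host: str) -> bool:
    """True if host is a watched AI domain or one of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in AI_DOMAINS)


def audit_ai_usage(log_file) -> Counter:
    """Count requests to AI domains per department from a CSV proxy log.

    Assumes hypothetical columns named 'department' and 'host'.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(log_file):
        if is_ai_host(row["host"]):
            counts[row["department"]] += 1
    return counts


if __name__ == "__main__":
    sample_log = io.StringIO(
        "department,host\n"
        "finance,chatgpt.com\n"
        "finance,intranet.example.com\n"
        "legal,claude.ai\n"
        "finance,chatgpt.com\n"
    )
    for dept, hits in audit_ai_usage(sample_log).most_common():
        print(f"{dept}: {hits} AI requests")
```

Matching on subdomains as well as exact hosts catches API endpoints, while the explicit dot check avoids false hits on look-alike domains.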

Medium-term Implementation (90 Days):

- Deploy AI-specific security monitoring tools

- Create formal AI usage policies with enforcement mechanisms

- Begin training programs on secure AI practices

Long-term Transformation (12 Months):

- Integrate AI security into enterprise risk management frameworks

- Develop internal AI security expertise and capabilities

- Establish continuous improvement processes for AI risk management

The key insight: organizations that treat AI security as an enabler of innovation, not a barrier, achieve both better security outcomes and faster, more successful AI adoption.

This connects to a broader shift: we're at an AI inflection point where security-conscious implementation separates the winners from the growing ranks of organizations learning expensive lessons.

Unlike Duolingo's AI-first disaster, where a strategy of replacing contractors with AI failed, organizations following the "frontier firm" approach understand that security and human oversight create the foundation for sustainable AI transformation.

This transformation isn't simple, and you don't have to figure it out alone. The gap between the 69% who recognize the threat and the 47% who lack protection is an opportunity for organizations willing to build proper foundations before rushing into complex AI deployments.


Looking to build security-conscious AI transformation in your organization? Groktopus helps enterprises navigate complex AI implementations with the security frameworks and human-first approaches that prevent costly mistakes. Let's discuss how to deploy AI systems that enhance capability without compromising security.
