The AI Code Generation Process Paradox: Why 88% of Pilots Fail (And How the Other 12% Succeed)

88% of AI code generation pilots fail. The winners treat it as process transformation, not tool implementation—achieving 3x better adoption rates.

You've selected the perfect AI coding tool. Maybe it's GitHub Copilot, Cursor, or Claude. Your developers are excited. The demos look magical. Yet six months later, you're part of the 88% whose AI code generation pilot never made it to production.

The tools work—that's not the problem. Organizations that treat AI code generation as a process challenge rather than a technology challenge achieve 3x better adoption rates. The difference between success and failure isn't about which AI model you choose. It's about recognizing that AI code generation represents a fundamental transformation in how software gets built, not just a new IDE plugin.

The Tool Selection Trap

Every failed AI code generation initiative starts the same way: endless debates about GitHub Copilot vs Cursor vs Claude vs Amazon Q. Teams compare features, run benchmarks, and argue about pricing models. Meanwhile, they're missing the fundamental challenge.

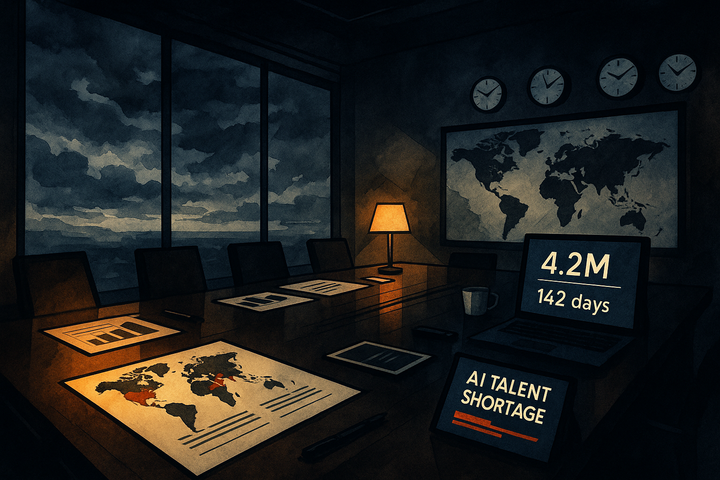

Unclear objectives, insufficient data readiness, and a lack of in-house expertise are sinking many AI proofs of concept. But here's what's more telling: In one IDC study, for every 33 AI prototypes a company built, only 4 made it into production—an 88% failure rate for scaling AI initiatives.

The evidence is overwhelming. Whether you're looking at overall AI initiatives, where 70-85% of GenAI deployment efforts fail to meet their desired ROI, or specifically at code generation tools, the pattern holds: technology selection isn't the bottleneck; process transformation is.

Consider this real-world example: A leading automotive manufacturer built an AI tool to help engineers find part numbers through a conversational interface. It failed spectacularly. Why? For decades, engineers had been finding parts just fine with basic keyword search. The AI solution solved a non-existent problem while ignoring the real workflow challenges engineers faced daily.

The Process Reality

What separates the successful 12% from the failing majority? They understand that AI code generation isn't just faster typing—it's a new way of building software that requires systematic organizational change.

Teams without proper AI prompting training see 60% lower productivity gains compared to those with structured education programs. This gap isn't only about teaching developers to write better prompts. It's about fundamentally rethinking how code gets created, reviewed, and deployed.

Consider what successful organizations actually do differently. They follow what DX Research calls the 8-step framework for AI code generation success:

1. Establish Clear Governance Policies

Successful teams don't just distribute licenses and hope for the best. They create comprehensive usage guidelines that specify when AI is appropriate, how generated code gets approved, and what documentation standards apply. These guardrails matter because, by one account, "Gen AI POCs in the enterprise are getting approved much more easily than other technologies in general," mostly because of CEO and board pressure. Without guardrails, the result is proliferation without purpose.

2. Prioritize Code Review and Quality Assurance

The Qodo "State of AI Code Quality" research reveals why this matters: When teams report "considerable" productivity gains, 70% also report better code quality—a 3.5× jump over stagnant teams. With AI review in the loop, quality improvements soar to 81%. The teams achieving these results don't just review AI code—they've redesigned their review process for AI's unique failure modes.

3. Ensure Data Privacy and Security

AI models trained on public repositories can leak patterns and suggest vulnerable implementations. Organizations need explicit policies about what can be shared with AI services, plus technical controls preventing accidental exposure of proprietary logic or sensitive data.
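One way to picture such a technical control is a small filter that scans text before it leaves the developer's machine. The sketch below is an illustration under stated assumptions, not a vetted secret scanner: the pattern names and regular expressions are hypothetical examples, and a real deployment would lean on a maintained scanning tool plus your organization's own rules.

```python
import re

# Hypothetical patterns for illustration only; real policies need a maintained ruleset.
SENSITIVE_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)password\s*=\s*['\"][^'\"]+['\"]"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches of each pattern with a placeholder and report which patterns fired."""
    findings = []
    for name, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            findings.append(name)
    return text, findings


# Made-up snippet containing a fake password and a fake key-shaped string.
snippet = 'db_password = "hunter2"\nkey = "AKIAABCDEFGHIJKLMNOP"'
clean, findings = redact(snippet)
```

A filter like this could run in an editor plugin or a proxy in front of the AI service. The `findings` list gives you something to log, so policy violations become measurable rather than invisible.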

4. Provide Comprehensive Training

DX's research found that "AI-driven coding requires new techniques many developers do not know yet." The gap between having AI tools and using them effectively is massive. Successful organizations invest in teaching advanced techniques like meta-prompting (embedding instructions within prompts) and prompt chaining (using one output as the next input).
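To make those two techniques concrete, here is a minimal sketch. Everything in it is an assumption made for illustration: `stub_model` is a hypothetical stand-in that simply echoes its prompt so the example runs without any AI service, and the instruction wording is invented rather than taken from DX's material.

```python
from typing import Callable

# A model is anything that maps a prompt string to a response string.
Model = Callable[[str], str]


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real assistant; echoes the first prompt line."""
    return f"<response to: {prompt.splitlines()[0]}>"


def with_meta_instructions(task: str) -> str:
    """Meta-prompting: embed instructions about how to answer inside the prompt itself."""
    return (
        f"{task}\n"
        "Instructions: list your assumptions first, then give the code, "
        "then flag any part you are unsure about."
    )


def run_chain(model: Model, steps: list[str], initial_input: str) -> list[str]:
    """Prompt chaining: each step's output becomes the next step's input."""
    outputs = []
    current = initial_input
    for step in steps:
        prompt = with_meta_instructions(f"{step}\nInput:\n{current}")
        current = model(prompt)
        outputs.append(current)
    return outputs


steps = [
    "Summarize what this function does",
    "Propose unit test cases for the summarized behavior",
    "Write the tests as pytest functions",
]
results = run_chain(stub_model, steps, "def add(a, b): return a + b")
```

Swapping `stub_model` for a call to whichever assistant your team uses is the only change needed. The chain itself stays the same, which is the point: the technique lives in the process, not the tool.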

5. Integrate with Existing Workflows

According to DX's research on AI code assistant adoption, the highest-ROI use cases, in order, are:

- Stack trace analysis

- Refactoring existing code

- Mid-loop code generation

- Test case generation

- Learning new techniques

Teams that start here see immediate value, building momentum for broader adoption.

6. Monitor and Measure Impact

Without measurement, you're flying blind. Track adoption rates, productivity metrics, code quality indicators, and bug rates in AI-generated sections. DX provides frameworks for measuring AI's impact that connect tool usage to business outcomes.
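As a rough sketch of what tracking these numbers might look like, the snippet below computes an adoption rate, a suggestion acceptance rate, and bug density for AI-generated versus other code. The field names and the sample figures are invented for illustration; they are not DX's framework, and you would map them to whatever your own tooling records.

```python
from dataclasses import dataclass


@dataclass
class TeamSnapshot:
    """Hypothetical fields; map them to whatever your tooling actually records."""
    licensed_devs: int
    active_devs: int            # used the assistant at least once in the period
    suggestions_shown: int
    suggestions_accepted: int
    ai_lines: int               # lines attributed to AI-generated sections
    ai_bugs: int                # bugs traced to those sections
    other_lines: int
    other_bugs: int


def ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def summarize(s: TeamSnapshot) -> dict[str, float]:
    """Turn raw counts into the rates worth watching over time."""
    return {
        "adoption_rate": ratio(s.active_devs, s.licensed_devs),
        "acceptance_rate": ratio(s.suggestions_accepted, s.suggestions_shown),
        "ai_bugs_per_kloc": 1000 * ratio(s.ai_bugs, s.ai_lines),
        "other_bugs_per_kloc": 1000 * ratio(s.other_bugs, s.other_lines),
    }


# Made-up numbers purely to exercise the function.
snapshot = TeamSnapshot(50, 40, 2000, 600, 20000, 30, 80000, 100)
report = summarize(snapshot)
```

Comparing the two bug-density figures side by side is what tells you whether review processes for AI-generated code are keeping up.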

7. Stay Updated with AI Advancements

The landscape changes monthly. New models, capabilities, and pricing structures emerge constantly. Create formal evaluation processes rather than reactive adoption. Compare tools systematically based on your specific needs.
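A formal evaluation process can be as simple as a weighted scoring matrix. The sketch below assumes hypothetical criteria, weights, and 1-5 scores, and uses placeholder names (`tool_a`, `tool_b`) rather than real products; the value lies in agreeing on the weights before anyone sees a demo.

```python
# Hypothetical criteria, weights, and scores: replace with your own evaluation data.
WEIGHTS = {"security_controls": 0.4, "workflow_fit": 0.35, "cost": 0.25}

CANDIDATES = {
    "tool_a": {"security_controls": 4, "workflow_fit": 3, "cost": 5},
    "tool_b": {"security_controls": 5, "workflow_fit": 4, "cost": 3},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of score * weight over every criterion; a missing score raises KeyError."""
    return sum(scores[criterion] * weight for criterion, weight in weights.items())


def rank(candidates: dict[str, dict[str, float]], weights: dict[str, float]):
    """Return (name, score) pairs sorted from highest to lowest score."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank(CANDIDATES, WEIGHTS)
```

Rerunning the same matrix each quarter gives you a consistent basis for deciding whether a new release actually changes the picture.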

8. Foster a Culture of Continuous Learning

As DX's leadership guidance notes: "Developers who leverage AI will outperform those who resist adoption." Position AI as career enhancement, not job replacement.

The Developer Trust Gap

Even with perfect processes, there's a human challenge that can derail everything: developer trust. The Qodo "State of AI Code Quality" report reveals a striking reality: 76% of developers fall into the "red zone"—experiencing frequent hallucinations with low confidence in AI-generated code.

Think about that. Three-quarters of developers using AI tools don't trust the output. Only 3.8% report both low hallucination rates AND high confidence. This isn't a tool problem—it's a process problem.

The trust crisis becomes clearer when you understand what developers actually experience. MIT researchers found that today's AI interaction is "a thin line of communication." When developers ask for code, they receive large, unstructured files with superficial tests. "Without a channel for the AI to expose its own confidence—'this part's correct … this part, maybe double-check'—developers risk blindly trusting hallucinated logic that compiles, but collapses in production."

The solution? Systematic approaches to building trust:

- Mandatory AI code review: Not just checking syntax, but verifying logic and integration points

- Confidence indicators: Teaching developers to recognize when AI is likely hallucinating

- Gradual adoption: Starting with low-risk use cases and building confidence over time

- Transparency: Acknowledging AI's limitations rather than overselling capabilities

The Success Pattern

What do the successful 12% actually look like in practice? Let's examine real enterprise implementations:

Accenture's Systematic Approach

Accenture's implementation with GitHub Copilot provides a blueprint for success. In their randomized controlled trial:

- Over 80% of participants successfully adopted GitHub Copilot with a 96% success rate among initial users

- 67% of participants used GitHub Copilot at least 5 days per week, averaging 3.4 days of usage weekly

- 84% increase in successful builds, indicating not just more pull requests but higher quality code

- Developers retained 88% of GitHub Copilot-generated code in production

What made Accenture different? They didn't just distribute licenses. They conducted comprehensive adoption analysis, tracked installation rates, measured code acceptance rates, and surveyed developers to understand workflow integration. As their study notes: "Success was determined by whether they accepted a suggestion from GitHub Copilot or not"—focusing on actual usage, not just access.

Industry Leaders Seeing Real Results

Other enterprises report similar success when focusing on process:

- Carvana: Alex Devkar, SVP of Engineering and Analytics, reports: "The GitHub Copilot coding agent fits into our existing workflow and converts specifications to production code in minutes. This increases our velocity and enables our team to channel their energy toward higher-level creative work."

- Indra: This aerospace and defense company found that "With very little experience in the language I was coding in, GitHub Copilot helped me generate a fully-functional class in less than three minutes," with developers reporting 70 lines of code generated effectively.

- Future Processing: Their case study showed developers experienced a 34% speed increase when writing new code and 38% when writing unit tests, with 96% of developers saying Copilot sped up their everyday work.

The patterns among successful organizations include:

1. Executive Commitment Beyond Buzzwords

Research shows that in 2022, the average employee experienced 10 planned enterprise changes—up from just two in 2016. Adding AI to this change fatigue requires genuine leadership commitment, not just innovation theater.

2. Investment in Human Capability

Success requires understanding what developers actually need, not what looks impressive in demos. Organizations with strong AI change management are 60% more likely to achieve ROI.

3. Realistic Expectations

DX's research with 38,000 participants found median time savings of 4 hours per week, or about a 10% productivity improvement. That aligns with Google CEO Sundar Pichai's estimate of a 10% productivity increase, and even field experiments on the optimistic end show a 26.08% increase in completed tasks. These gains are significant, but they are not the 10x transformation some vendors promise. Organizations setting realistic goals achieve them; those chasing moonshots fail.
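The arithmetic behind that comparison is worth spelling out. This short sketch assumes a standard 40-hour work week, which the research summary above does not state, and converts the 4 hours saved into a throughput multiplier you can set against a "10x" claim.

```python
HOURS_SAVED_PER_WEEK = 4      # median reported in the text
WORK_WEEK_HOURS = 40          # assumption: a standard 40-hour week

share_saved = HOURS_SAVED_PER_WEEK / WORK_WEEK_HOURS

# If the same work takes (1 - share_saved) of the time, output per hour rises by this factor.
throughput_multiplier = 1 / (1 - share_saved)

print(f"Share of week saved: {share_saved:.0%}")                # prints "Share of week saved: 10%"
print(f"Throughput multiplier: {throughput_multiplier:.2f}x")   # prints "Throughput multiplier: 1.11x"
```

A multiplier of roughly 1.11x is a real gain across a large engineering organization, but it is an order of magnitude away from 10x.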

4. Process-First Thinking

As MIT's comprehensive research emphasizes: "Software already underpins finance, transportation, health care, and the minutiae of daily life, and the human effort required to build and maintain it safely is becoming a bottleneck." The successful minority understand they're solving a business process challenge, not implementing a tool.

Beyond Code Generation: The Bigger Picture

Perhaps the most important insight comes from MIT's research team: "code completion is the easy part; the hard part is everything else." This understanding separates organizations that achieve lasting transformation from those stuck in pilot purgatory.

Real software engineering encompasses far more than writing new functions:

- Refactoring that improves design without changing functionality

- Migrations moving millions of lines between languages or frameworks

- Continuous testing including security analysis and performance optimization

- Documentation and knowledge transfer for team scalability

- Debugging complex distributed systems and race conditions

MIT's Solar-Lezama argues that popular narratives often shrink software engineering to "the undergrad programming part: someone hands you a spec for a little function and you implement it." Organizations stuck in this narrow view will never succeed with AI code generation because they're optimizing for the wrong problem.

The Window of Opportunity

Why act now? The data shows we're at an inflection point. GitHub's 2024 survey found that 82% of developers use AI coding assistants daily or weekly—these tools have moved from experimentation to core workflow. But there's a crucial gap: while 97% have tried AI tools, companies actively encouraging adoption range from only 59% (Germany) to 88% (US).

This gap represents opportunity. Organizations building systematic approaches now will have insurmountable advantages as the technology matures. Early adopters are already seeing compounding benefits:

- High-confidence engineers are 1.3x more likely to say AI makes their job more enjoyable

- Field experiments with 4,867 developers show a 26.08% increase in completed tasks among developers using AI tools

- Teams using AI for test generation report 2x higher confidence in their test coverage

- Accenture saw an 84% increase in successful builds with proper implementation

But these benefits only materialize with proper process transformation. McKinsey's analysis suggests that "organizations may only realize the benefits of an AI-enabled software PDLC with a fundamental shift in their ways of working." Microsoft research finds that it can take 11 weeks for users to fully realize the satisfaction and productivity gains of using AI tools.

Your Action Plan

If you're among the 88% stuck in pilot purgatory, here's your evidence-based path forward:

Weeks 1-2: Assess Current State

- Survey developers about their AI tool usage and trust levels (aim to understand your "red zone" percentage)

- Document existing code review and quality processes

- Identify high-impact use cases from DX's prioritized list
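To turn those survey answers into a "red zone" percentage, something like the following could work. It's a minimal sketch: the response format, the `zone` helper, and the confidence threshold are all assumptions made for illustration, not the method used in the Qodo report.

```python
from collections import Counter

# Hypothetical survey rows: (how often the developer sees hallucinations, confidence 1-5).
responses = [
    ("frequently", 2),
    ("frequently", 1),
    ("rarely", 5),
    ("frequently", 4),
    ("rarely", 2),
]


def zone(frequency: str, confidence: int, confident_at: int = 4) -> str:
    """Bucket one response; the threshold of 4 is an assumption, not the report's method."""
    frequent = frequency == "frequently"
    confident = confidence >= confident_at
    if frequent and not confident:
        return "red"          # frequent hallucinations, low confidence
    if not frequent and confident:
        return "green"        # low hallucinations, high confidence
    return "mixed"


counts = Counter(zone(f, c) for f, c in responses)
red_share = counts["red"] / len(responses)
```

Running the same survey again after training and process changes gives you a before-and-after view of whether trust is actually improving.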

Weeks 3-4: Design Governance Framework

- Create usage guidelines and approval processes

- Establish security and privacy policies based on enterprise best practices

- Define success metrics and measurement systems

Month 2: Launch Training Program

- Focus on advanced prompting techniques that actually matter

- Practice on real use cases from your codebase

- Build internal champions who can train others

Month 3: Pilot with Process Focus

- Start with highest-ROI use cases (in DX's ranking above, stack trace analysis and refactoring come first, with test generation close behind)

- Implement enhanced review processes specifically for AI-generated code

- Measure both adoption and quality metrics using established frameworks

Ongoing: Iterate and Scale

- Regular tool evaluation using systematic comparison methods

- Continuous training as new techniques emerge

- Cultural reinforcement through success stories and recognition

The Choice Is Yours

The data is unequivocal. The patterns are consistent across industries. Organizations face a simple but consequential choice: join the 88% treating AI code generation as a tool implementation, or join the 12% treating it as organizational transformation.

The technology works—that's proven. By integrating AI into the end-to-end software development lifecycle, companies can empower engineers to spend more time on higher-value work. But only if you stop obsessing over which tool to use and start focusing on how to transform your development process.

The winners won't be those with the best AI models. They'll be those who best adapt their human processes to amplify AI's capabilities while mitigating its weaknesses. Real-world implementations at companies from Wendy's to the World Bank show that success comes from systematic transformation, not technology selection.

Because in the end, success with AI code generation isn't about the AI. It's about recognizing that when the fundamental nature of work changes, the processes supporting that work must change too. The tools are ready. The evidence is clear. The only question is: Are you ready to move beyond pilot purgatory into production success?

Start your transformation today. The 12% are waiting.
